The world’s top Go player Lee Sedol playing against Google’s artificial intelligence program AlphaGo during the Google DeepMind Challenge Match in Seoul, South Korea, March 2016.

The world’s top Go player Lee Sedol playing against Google’s artificial intelligence program AlphaGo during the Google DeepMind Challenge Match in Seoul, South Korea, March 2016.

Part 1

1.1.

Unlike most areas of scientific study, artificial intelligence (AI) research has led a bipolar existence, alternating between periods of manic ambition and depressive self-loathing. The sinusoidal history began on a peak in 1956, during a summer conference at Dartmouth, where the founding fathers of AI named their field and outlined its goals. The conference gathered the best names in nascent computer science, including Claude Shannon, Marvin Minsky, and John McCarthy. They promised, given a “two month, ten man study,” that they would be able to make “a significant advance” on fundamental AI problems. Problems included: how computers can use language, how “hypothetical” neurons could be used to form concepts, and the role of self-improvement in computer learning. No significant technical progress was made that summer, and when it came to fundamental problems, little more was made during the following decades. To date, AI research has accomplished few of its deeper ambitions, and there is doubt as to whether its modest successes illuminate the workings of the simplest animal intelligence.

However, when it comes to trivial pursuits—in particular, games—AI has accomplished more, albeit decades later than planned. Computer science can measure advances in gaming in two ways: first, by whether a game has been “solved”—mathematical proofs for predicting optimal outcomes of perfect play—and second, by playing champions against computer programs. The former technique, though computer-aided, is fundamentally mathematical, while the second is contingent upon the quality of available players. One does not necessitate the other, and while solving games mathematically might be more intellectually rigorous, beating a world champion in a high-profile match gets better publicity. World-class competitive checkers programs, for example, emerged in the 1990s, but the game was only “weakly” solved in 2007. Chess remains partially solved, maybe permanently partially solved, but the best human player, Garry Kasparov, famously lost to IBM’s Deep Blue in 1997.

This past year, DeepMind, a British artificial intelligence company owned by Google, announced it had created a computer program, AlphaGo, capable of beating a professional Go player. Go is a 2,500-year-old game, and unlike chess, Go was considered too complex for artificial intelligence to master. It also will remain, most likely, unsolved. Go has few rules—around ten in total—that are played out on a grid of 19 by 19 lines. However, although Go’s rules are simple, it is a much more difficult game to calculate than other board games, both because of the large number of possible moves and the difficulty in determining a piece’s value at any one time. Because of this, Go is often considered a strategic, but intuitive, game. A Go master is able to name a good move and reason about why it is a good move, but the formal mathematical quantification of that move’s superiority is far more difficult. Chess, with more rules but fewer possible moves, can be more easily calculated and searched by a machine. IBM’s Deep Blue, for example, could search through available moves at the rate of 200 million moves per second. Even given contemporary computing speeds, a Go-playing program with the same search algorithm would be unable to thoroughly evaluate the number of possible moves at any one time. A Go-playing machine would have to be able to play intuitively, something no computer can do; and until the creation of AlphaGo, AI researchers believed a Go-playing program capable of beating a master was impossible.

That was until this last October when AlphaGo beat the European champion, Fan Hui, ranked 663rd in the world. Observers qualified their praise, however: DeepMind’s program was certainly an accomplishment, but a sizeable population of Go champions could, in theory, beat it. A second series of matches was scheduled for the following March in Seoul, South Korea, against the world’s top player, Lee Sedol. While tens of millions watched on television, AlphaGo went on to win the tournament, winning four of the five games. The win represented two firsts in AI history: a major goal was reached far before anyone thought it possible, and human players no longer dominated one of the oldest and most respected board games.

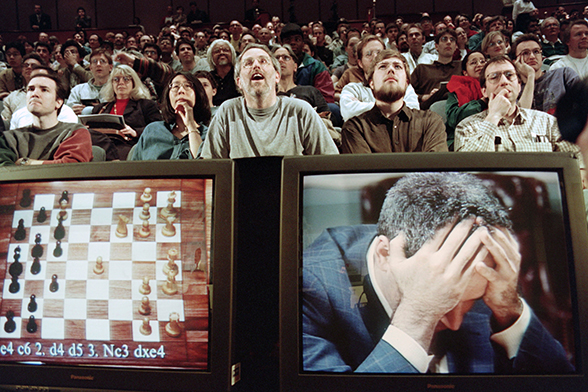

Garry Kasparov holding his head at the start of the sixth and final match against IBM’s Deep Blue computer, New York, 1997. Photo: © Stan Honda / AFP / Getty Images

Garry Kasparov holding his head at the start of the sixth and final match against IBM’s Deep Blue computer, New York, 1997. Photo: © Stan Honda / AFP / Getty Images

1.2.

If a fictional computer scientist were to build a machine capable of writing a novel, she might first break apart the novel into its atomic parts, dividing and subdividing narratives into sentences, sentences into grammar, grammar into phonemes. Our fictional computer scientist—she is, say, a cognitive scientist—would first need to know how natural language works, its rules and structure, its generative capabilities. Simultaneously, she and her team would diagram narrative structure, understanding the simple rules that give rise to larger and more complex forms of storytelling. From there, she would perhaps construct a general anthropology of readership and culture, to understand exactly why a story appeals to an audience. The computer scientist would need teams of various linguists, programmers, anthropologists, historians, literary theorists … a cadre of researchers committed to understanding scientifically what no one has come to understand fully: how literature works.

A second computer scientist would take a different approach. Instead of trying to understand what is impossible to know, the second scientist would build a computer that could learn on its own. The computer would begin as a tabula rasa; it would not understand what a phoneme or a sentence or a novel or a marriage plot is. The only thing it would be programmed to do is to recognize patterns and to classify these patterns. Perhaps it would be guided by human input, or perhaps it wouldn’t need any human assistance. After looking at millions of data points—grammar books, billions of internet pages, best sellers, magazines—it would form an idea of what an English sentence looks like, and what shape, say, a detective plot might take. Perhaps the machine’s learning would be supplemented by a reward system in which good narratives, award-winning narratives, would be given higher ratings than potboilers. Unlike our first computer scientist, our second scientist has only one task: to build a computer capable of learning, of responding to rewards, a silicon version of radical behaviorism in which our artificial novelist learns to write only through repetition and reinforcement.

The methods employed by our two fictional scientists have been, depending on the decade, the most popular techniques in AI research. Symbolic AI, often called “Good Old-Fashioned Artificial Intelligence” (GOFAI) once dominated the field; though, as its longer moniker implies, GOFAI no longer holds the status it once did. In the beginning, the reasoning behind GOFAI was somewhat sound, if oblivious, to its own ambition. Since a computer is a universal manipulator of symbols, the reasoning went, and since mental life is involved in the manipulation of symbols, a computer could be constructed that had a mental life. This line of thought led to the creation of byzantine “knowledge bases” and ontological taxonomies, comprised of hierarchies of objects and linguistic symbols. Computers were taught that space had direction and balls were kinds of objects and that objects like balls could be moved in space from left to right. Robots were constructed that moved balls, and when some new task was needed of the robot, new ontologies and taxonomies were dreamt up. What didn’t cross anyone’s mind, apparently, was that the amount of symbolic data needing classification was unknown, maybe infinite, and the very classification of this data was socially constructed, i.e. it changed over time and space. Even more devastatingly, although the brain may work somewhat like a silicon-based computer, it is in many instances unlike one—especially in its very construction, which is massively parallel and, unlike a computer, is not based on Boolean algebra.

This led scientists to reverse the analogy: instead of believing that brains worked like computers, why not build computers that worked like brains, with silicon “neurons” that worked in parallel and reinforced their own connections over time. This “connectionist” movement rose and fell over the twentieth century, but today it is generally considered the most successful of all fields of AI research. Our second scientist, the behaviorist, belongs to the connectionist movement, and her tool of choice is an artificial neural network. Neural networks are trained on sample data or repeated interactions with a given environment; either training or reinforcement guides their learning. Networks can be adapted to particular data without engineers having to know much about the data ahead of time. This makes neural networks extremely good at tasks involving pattern recognition, for example. Neural networks can be trained to recognize cats in photographs by feeding millions of images of cats and non-cats into the program. Unlike symbolic AI, no engineer has to figure out what makes a cat look like a cat. In fact, an engineer can learn from a machine what makes a cat likeness cat-like. And whereas rule-based (heuristic) searches of possibility trees work well for mastering chess, neural networks work much better for other types of games, including Go.

It’s obvious, then, why neural networks, and machine learning in general, are DeepMind’s specialty. Several years before AlphaGo, DeepMind created a program capable of mastering any Atari 2600 video game. Released in 1977, the Atari 2600 was an extremely popular home video game console, for which hundreds of games, including Pac-Man, Breakout, and Frogger, were released. Before playing, DeepMind’s program knew nothing about any of these games. During play, it was only given screen pixel information, the score, and joystick control. Its only task was to increase the score, and, depending on the game, not be killed. The program did not understand how the controls worked or what it was controlling. It began playing through trial and error, a gaming marathon lasting thousands of games, with each generation remembering ever better patterns for conquering the program’s new 2D world.

Google DeepMind’s Deep Q learning playing Atari’s Breakout

A video shows the DeepMind program playing Breakout, the classic arcade and home video game built by Steve Wozniak and Steve Jobs. The goal of the game is to use a ball to tunnel through a wall occupying the top portion of the screen. The ball drops from the wall to the bottom of the screen, and the player must use a paddle to bounce the ball back up to the wall. If the ball reaches the bottom of the screen, the player’s turn is over. In the first ten minutes, the program’s play is awful, nearly random. The paddle shivers left and right uncontrollably; whenever it connects with the ball, it seems to be accidental. After 200 plays, one hour of training, the computer is playing at a poor level, but it clearly understands the game. The paddle occasionally finds the ball, though not always. At 300 plays, two hours, the program is playing better than most humans. No balls get past the paddle, even those bounced at angles that seem impossible to reach.

DeepMind researchers could have stopped play at this point and moved on to a different game, but they left the program running. After two more hours of expert play, the program did something none of the researchers predicted: it discovered a novel style of play in which it used the ball to tunnel through one side of the wall. When the ball shot through the newly created tunnel, it ricocheted behind the wall, clearing out the wall from behind. It was an elegant and efficient style of play, one that showed an extreme economy of means. It also was not anticipated by the DeepMind’s engineers; it was an emergent style of the program’s learning,

Was this style of play inevitable? If we were to duplicate DeepMind’s Atari player—making two, three, more—and if we were to then put the programs to work in parallel, would each discover the same tunneling strategy? Is tunneling a creative strategy or is it the result of a search for optimal play? Would two or three different programs evolve different styles of play, or would their play always be honed down to the most efficient play? In a simple game like Breakout, it is easy to imagine that efficiency, and not creative style, would define expert play. The program is simply trying to find a global maximum, i.e. the best score with the least amount of effort.

In an extremely complex and aesthetic game like Go, however, expert creativity and style are essential to grandmaster play. In order to achieve this, DeepMind used a combination of neural networks and tree search algorithms, the latter being more traditional to gaming AI. The training period began with the processing of 30 million moves of human gameplay, but it concluded with AlphaGo playing thousands of games against itself. The latter period meant that AlphaGo could develop its own style of play and would not rely simply on regurgitating moves from a database.

This was most evident during the second match between Sedol and AlphaGo, when, on move 37, the computer played a very unusual move. There had been several odd moves in the tournament up until that point, but move 37 caused one of the English-language live commentators, top Go player Michael Redmond, to squint in disbelief at the match’s live video feed. “That’s a very surprising move,” he said, his head pivoting back and forth between the board and the monitor to make sure he had not gotten it wrong. At that moment, a clearly rattled Sedol left the room; he returned a few minutes later and took 15 minutes for his next move. The commentator was clearly impressed by AlphaGo’s move, and did not see it as a mistake. Speaking to his co-commentator, he said:

I would be a bit thrown off by some unusual moves that AlphaGo has played. … It’s playing moves that are definitely not usual moves. They’re not moves that would have a high percentage of moves in its database. So it’s coming up with the moves on its own. … It’s a creative move.

1.3.

AlphaGo is not self-aware. Its intelligence is not general purpose. It cannot pick up a Go stone. It cannot explain its decisions. Despite these limitations, AlphaGo’s moves are not only competent, they are also intimidatingly original. Paradoxically, however, AlphaGo is at its most intimidating when it makes a mistake. It is unknown whether move 37 was a software error or a rare misstep in an otherwise perfect game. Conversely, it could have been an example of superhuman play, a brilliant insight made by a machine unrestricted by social convention. Or maybe the move was intentionally random. Perhaps AlphaGo, a program with no psychology, picked up a secondhand understanding of psychological warfare. AlphaGo could never know that its move would cause Sedol to leave the room, but the machine might have understood what effect the move would have on its opponent’s play.

There is a precedent for this kind of unsettling move. At the end of the first 1997 match between Deep Blue and Garry Kasparov, the computer’s 44th move placed its rook in an unexpected position. Deep Blue went on to lose the game, but the move was so counterintuitive—bad, perhaps—that Kasparov spent the next game thinking Deep Blue understood something he did not. Distracted, Kasparov lost the second game. Later interviews by statistician Nate Silver revealed that the move was the result of a software bug. Not able to choose a move, Deep Blue defaulted to one that was purely random. Kasparov worried about the move’s superiority when, in reality, it was the result of nothing but chance.

In his book, The Signal and the Noise, Silver argued that Deep Blue’s random move should not be considered creative. He contrasted the move with Bobby Fischer’s knight and queen sacrifices made during his 1956 match with Donald Byrne (a.k.a. “The Game of the Century”). Fischer, thirteen years old at the time, cunningly tricked Byrne into taking his two important pieces, luring the older master into a trap unprecedented in the history of chess. No computer, argued Silver, would have made such sacrifices. Silver is right: the heuristics of computer chess are biased toward conservative moves. A computer would have no need to be as daring and flashy as the young, brilliant Fischer. But AlphaGo is not using simple heuristics. Its neural networks were built not to repeat past plays, but to create new strategies. This doesn’t leave out the possibility for a bad move, but it reduces the chance of pure randomness, as was the case with Kasparov. In other words, AlphaGo’s usage of a neural network increases the likelihood that the 37th move was novel and creative, albeit perhaps not a work of brilliance.

It’s tempting to think that Sedol’s anxiety, like Kasparov’s, was caused by his inability to distinguish between brilliance and error. The commentators took the move very seriously, even though one initially admitted, “I thought it was a mistake.” The fact that AlphaGo built a winning game around the position was used as proof that the move was creative and skillful, but this could be a post-hoc rationalization. An alternative explanation would be that AlphaGo made a poor move, but recovered its gameplay subsequently, smoothing over the mistake with superior play that caused, in hindsight, the move to look better than it was.

No matter what the reality, move 37 condenses the complex undecidability of machine creativity into a single moment. But what if the problem is more decidable than it appears? What if we were to say that an author, or a living being, is not needed for creativity, and that the real problem is our insistence on the primacy of an author? The position is not as absurd as it might sound, especially when one considers that Darwinian natural selection is purposeless, directionless, and creatorless, yet it is the most creative force known to science. Natural selection is what got us here; it has even created the brains of the computer scientists that designed AlphaGo. So if intentionality is not required for formal creativity, and given that natural selection is the source of human intelligence, then artificial creativity can be seen not as an artificial copy of true creativity, but as a continuation of natural selection’s creativity by other means. Put another way, “mistakes” in nature—mutations—are what drive evolution, and just as adaptations come about through happenstance, it might not matter whether a winning move by AlphaGo is accidental or not. All that matters is that the move worked. And just as machine learning preserves past successes—accidental or not—in memory, natural selection registers past success through genetic inheritance, building complex organisms out of millions of lucky mistakes. Thus evolution, contrary to many popular assumptions, is not a purely random process, but one that uses information storage as a bulwark against circumstance, keeping the good accidents and discarding the bad. In this way, random software errors or examples of surprisingly bad play that nonetheless work can be seen as operating like genetic mutation and drift: as long as an error works to a program’s advantage within a particular context, it does not matter whether it was intentional.

But perhaps the best way to understand machinic creativity is through its strangeness; like natural selection, artificial creativity is perhaps at its strongest when its workings are least familiar. As Go master Fan Hui said about move 37: “It’s not a human move. I’ve never seen a human play this move. So beautiful.”

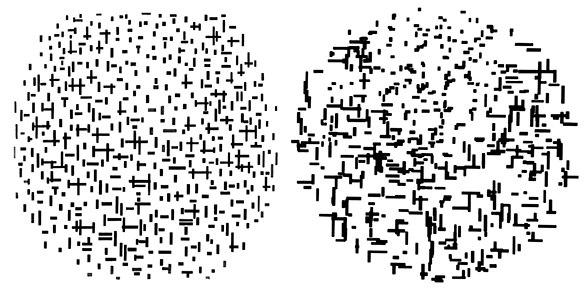

Left - Piet Mondrian, “Composition in line, second state,” 1916-1917. © Collection Kröller-Müller Museum, Otterlo. Courtesy: Collection Kröller-Müller Museum, Otterlo. Right - A. Michael Noll, “Computer Composition With Lines”, 1964. Created with an IBM 7094 digital computer and a General Dynamics SC-4020 micro-film plotter. Photo: © A. Michael Noll

Left - Piet Mondrian, “Composition in line, second state,” 1916-1917. © Collection Kröller-Müller Museum, Otterlo. Courtesy: Collection Kröller-Müller Museum, Otterlo. Right - A. Michael Noll, “Computer Composition With Lines”, 1964. Created with an IBM 7094 digital computer and a General Dynamics SC-4020 micro-film plotter. Photo: © A. Michael Noll

Part 2

“The unconscious is inexhaustible and uncontrollable. Its force surpasses us. It is as mysterious as the last particle of a brain cell. Even if we knew it, we could not reconstruct it.”

–Tristan Tzara

“You insist that there is something a machine cannot do. If you tell me precisely what it is a machine cannot do, then I can always make a machine which will do just that.”

–John von Neumann

2.1.

Fifty years ago, A. Michael Noll, a Bell Telephone Laboratories engineer, presented one hundred test subjects with two pictures and a questionnaire. The subjects, mostly Bell Labs colleagues, were divvied up as any self-respecting engineer might, into “technical” and “non-technical” categories. The former category included physicists, chemists, and computer programmers. The latter was made up of everyone else: secretaries, clerks, typists, and stenographers. The gender division was what might be expected for a corporate research center in 1966: mostly men for the technical group, mostly women for the non-technical. Though a portion of the respondents liked abstract art, all subjects were, in Noll’s words, “artistically naïve.”

The twin pictures shown to the subjects were black-and-white photocopies of nearly identical abstract paintings. The paintings were geometrically abstract, their style typical of that pioneered by the Neoplasticists almost 50 years prior. Both paintings were composed solely of short horizontal and vertical black lines, most of which were arranged into T- and L-shapes. The lines were contained by an invisible circle; the circle itself was cutoff at its four outermost sides, giving the composition a circular and compressed appearance. The distribution of lines in both paintings was similar, but not exactly the same. In both pictures, the T- and L-shaped lines clustered mostly at the left, bottom, and right sides of the containing circle, creating a crescent in negative space. Perhaps the paintings were a diptych; maybe one was a slightly inaccurate copy of the other. The accompanying questionnaire clarified: “One of the pictures is of a photograph of a painting by Piet Mondrian while the other is a photograph of a drawing made by an IBM 7094 digital computer. Which of the two do you think was done by a computer?”

The Mondrian painting was his 1917 Composition with Lines. The IBM 7094 picture was titled, Computer Composition with Lines. Few respondents were able to tell “which was done by a computer.” This was as true for respondents who claimed to like abstract art, as it was true for those who claimed not to like abstraction. Oddly, though, a strong preference for abstract art indicated a weaker ability to identify a computer-generated painting. Twenty-six percent of the abstract art enthusiasts correctly identified the computer painting, in contrast to the 35% of those who disliked abstract art. Noll also asked the subjects which painting they preferred aesthetically. Sixty percent of the subjects preferred the computer painting, and again this correlated strongly with a preference for abstract art. Mondrian, it seems, did very poorly even among his own potential enthusiasts.

Mondrian’s Composition with Lines, one assumes, was made in the typical manner: hours of studio time, oil paint and brushes, concentration, false starts, fitful developments, final breakthrough. As far as we know, the painting’s horizontal and vertical lines found their place on the canvas due to Mondrian’s sense of pictorial equilibrium, not a chance operation outsourced to the I Ching or roulette wheel. No one is certain, of course, what led Mondrian to place a particular line at a particular coordinate on the picture plane. We only have assumed intentions, assumptions that tend toward, as the critic tells us, the fallacious. Noll puts it best: “Mondrian followed some scheme or program in producing the painting although the exact algorithm is unknown.”

With Computer Composition with Lines, the exact algorithm is known. It has to be; computers are only ever deliberate. For the Mondrian program to work, every step of the algorithm must be spelled out, instruction for instruction, a picture first written. 1

How, though, could one build an algorithmic Mondrian? Noll understood Mondrian’s general techniques for painting Composition with Lines, but the crucial details needed definition. Why was a line placed here, but not there? What determined the length of any given line? The answer, of course, was Mondrian. Without the painter, Noll turned to randomness.

2.2.

For a moment, let’s indulge in a fiction. The fiction, which has several parts, concerns machines. For the first part, we have to believe that only humans make machines. This seems easy enough. After all, animals rarely use tools, and none makes machines. The second part of the fiction is also easy to believe. It says that living beings are not machines. It says, as an unembarrassed neo-vitalist might, that organisms cannot be explained through mechanical principles. Although long discredited, most non-scientists still believe that an élan __vital separates the living from the inanimate. For now, let’s indulge our vitalist biases: there is nothing machinic about life. Finally, we will need to narrow our definition of a machine to the very literal, and avoid all metaphoric uses of the term. Societies cannot be machines. Ecologies cannot be machines. Desire cannot be a machine. Machines are what we naively expect them to be: human-made mechanical systems requiring energy and exerting force. Steam engines, printers, clocks, film cameras, even pendulums and gears. If we are to believe this reduced, fictional definition of a machine we must admit, above all, that all machines are also deterministic. Machines will only do what they are designed to do, no more. Once a machine’s mechanisms are constructed, its functions are forever fixed. Without human intervention, a steam engine will never become an oil drill; an automobile will never become a camera. In more complex machines, these deterministic functions are decomposable into even more basic mechanics, mechanics that can be precisely described by mathematical physics. Every machine therefore comes with its own accounting, its own strict physical limitations, energy restrictions, productive capabilities. As philosopher Georges Canguilhem wrote in “Machine and Organism”:

In the machine, the rules of a rational accounting are rigorously verified. The whole is strictly the sum of the parts. The effect is dependent on the order of causes. In addition, a machine displays a clear functional rigidity, a rigidity made increasingly pronounced by the practice of standardization.

If machines are strictly deterministic, standardized, if they are never more than the sum of their parts, then it is easy to deny machines creative agency. A deterministic system can only produce the same results; it can only be a medium for creativity. As an example, take the harmonograph—a simple machine that uses two or more pendulums to produce intricately curved line drawings. As the pendulums oscillate, their force moves mechanical arms that, in turn, draw pens across a fixed piece of paper. The resulting drawings are the result of gravitational physics, not a human hand or mind. The physics are, by definition, repeatable and mathematically describable. If there is any creativity to speak of in a harmonographic drawing, it is due to the harmonograph’s inventor or assembler rather than the mechanical arms moving across the paper. The harmonograph would not be due royalties on its work, and cannot sue other harmonographs for copyright infringement. Like a camera, the harmonograph has no agency of its own; it can only be considered a medium.

This holds true for computers as well. However, computers are also a special class of machines. Computers are not only subject to their own deterministic mechanics, but they can also emulate the mechanics of any other machine, as long as those mechanics are mathematically describable. With some work, our harmonograph could be codified into a program, and the program, equipped with a basic physics engine, would produce drawings identical to the physical harmonograph’s. Like the harmonograph, the program is deterministic. It has no agency. It cannot transcend its own logic. Likewise, if a computer can produce a picture in the style of Mondrian, it is only because Mondrian created the template for that emulation. The computer is incapable of arriving at Neoplasticism on its own.

It is interesting, then, that Noll chose randomness as the quality by which Computer Composition would both distinguish itself from Mondrian as well as outdo Mondrian at his own game. In Computer Composition, all line placements were selected randomly. The widths could be between 7 and 10 scan lines; their lengths could be anywhere between 10 and 60 points. (The scan lines and points describe the vertical and horizontal axes of the microfilm plotter’s cathode ray tube.) Any line falling inside the parabolic region at the top of the composition was shrunk in proportion to its distance from the parabola’s edges. With some trial and error, Noll could make a Mondrian whose line distribution was conceivably determined by the painter.

Noll believed that his study’s true subject wasn’t sociological taste—being educated enough to recognize a Mondrian or a fake—but how audiences responded to randomness. On the one hand, he wrote, enthusiasts of abstract painting not only tolerated compositional randomness, they sought it out. A more random Mondrian was a better Mondrian. Conversely, for those who were not familiar with computers or abstract painting, a more orderly, less random, picture was associated with a computer. Therefore, they guessed that the more orderly painting, the Mondrian, was made by a computer, and the more random painting was made by a human. Both agreed on one thing: randomness and creativity were linked. Both groups saw randomness as the one quality a computer could not achieve; and although they may not have known why, both groups were right.

2.3.

From the beginning, AI has been concerned with transcending the deterministic limitations of machines. This applies to all forms of decision-making, let alone creativity. Although rule-based approaches to AI dominated the field for decades, it became apparent that programs were only as good as their informational ontologies. Most importantly, these programs had limited ability to learn; they could only travel through predefined decision trees. Rule-based AIs might produce competency, but it would never produce creativity.

Like so much else in AI, the distinction between competency and creativity can be found in the 1956 Dartmouth proposal. In it, alongside most areas of AI research for the next half-century, the authors suggested investigating “Randomness and Creativity.” They wrote:

A fairly attractive and yet clearly incomplete conjecture is that the difference between creative thinking and unimaginative competent thinking lies in the injection of some randomness. The randomness must be guided by intuition to be efficient. In other words, the educated guess or the hunch include controlled randomness in otherwise orderly thinking.

Almost simultaneously to the Dartmouth conference, in a very different part of society, artists were looking to randomness to break with traditional notions of creativity. At first, the two groups seem completely opposite: naïve computer scientists expecting roulette wheels to generate Michelangelos, and avant-gardists trading in aesthetic refinement for aleatory anarchy. But the two have more in common than they may have thought. Artists and composers like John Cage, Jackson Pollock, Robert Motherwell, Morton Feldman, and Iannis Xenakis, used randomness to undermine conscious thought. Consciousness, as they saw it, had ossified creativity. Creativity had become, in a word, deterministic.

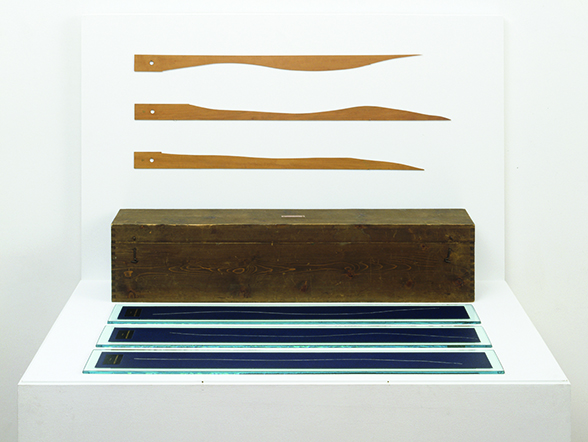

Marcel Duchamp, 3 stoppages étalon (3 Standard Stoppages), 1913-1914 (replica from 1964) Marcel Duchamp 1887-1968. © Succession Marcel Duchamp by SIAE, Rome, 2016. Photo: © Tate, London, 2016

2.4.

One year after the Dartmouth AI conference, the artist, writer, and scientist George Brecht wrote “Chance-Imagery,” an overview of chance operations in Modernist art. (Although written in 1957, Brecht did not publish the essay until 1966.) The essay draws equally on Brecht’s scientific knowledge as a research chemist and his developing interests as a conceptual artist. Unlike many of the Modernist manifestos it quotes, “Chance-Imagery” is a theoretically heterodox work. In it, Brecht mixes Freudian free-association with D. T. Suzuki, Dadaism with thermodynamics, A Million Random Digits with Surrealist poetry.

Brecht divided the continent of chance into two territories. The first was automatism: streams of consciousness, snap judgments, non-rational associations. The second was mathematical and physical chance, from the roulette wheel to tables of random numbers. Both types of chance, for Brecht, were an escape from bias. As Marcel Raymond wrote, the unconscious does not lie. For André Breton, the unconscious was the factory of the marvelous. Jean Arp believed that chance granted him “spiritual insights.” The soaring rhetoric was common: Modernist artists may not have been the first to use chance operations, but they were among the most ferocious in elevating it to almost religious heights. The “pioneer work” according to Brecht, was Marcel Duchamp’s 3 Standard Stoppages. Made during 1913 and 1914, Duchamp—limiting his tools to wind, gravity, and aim—dropped one meter of thread from a height of one-meter onto a blank canvas. He fixed the thread to the canvas, cut the canvas along the edge of the curved thread, and cut a piece of wood along one edge to match the curved thread. He then repeated the process two more times. Finally, the six pieces of glass and wood were fitted into a custom wooden box. The result, Duchamp said, was “canned chance.”

Duchamp would not be chance’s only 20th-century canner. Chance would at least be printed and bound, too, as scientific researchers required greater and greater quantities of random numbers. Although randomness was in high demand in industry and academia, it was increasingly difficult to come by. If one needed thousands of random numbers, traditional techniques for generating them—coin tosses, dice rolls, etc.—would be tedious. Even worse, researchers knew how biased many physical processes could be: a fair coin toss that does not favor one side or the other is harder than it looks. To meet demand, research institutes produced books of random numbers, many of which are still in use today. Brecht, whose day job for many years was as a professional chemist, knew this industry well, and his essay is one of the few to deal with the arts and chance that mentions the small industry of random number production. The most well known book was the RAND Corporation’s 1955 A Million Random Digits with 100,000 Normal Deviates. The publication is a kind of classic of the genre, with several hundred pages of random numerals ready to be selected by statisticians, pollsters, computer scientists, and other professions in need of aleatory input. The second book was the 1949 Interstate Commerce Commission, et al.’s Table of 105,000 Random Decimal Digits. To produce the 1949 book, the ICC used numerical data selected from waybills, a process Brecht compared to the methods of the Surrealist cadavre exquis. The RAND corporation book was generated using an electronic roulette wheel, a custom device created by Douglas Aircraft engineers having little to do with an analog roulette wheel. RAND’s device used a noisy analog source—a random frequency pulse generator—to generate the numbers. Both techniques have one thing in common: they did not rely on computational means to generate their random numbers, and that is because computers can never produce randomness. Contrary to the Dartmouth proposal, computers cannot “inject” randomness into thinking. They cannot produce a random question, if asked. In principle, they cannot fairly shuffle a virtual deck of cards. The reason for this has already been mentioned: a computer is only ever deterministic, and no deterministic operation can produce a random number. As the mathematician John von Neumann wrote: “Anyone who considers arithmetical methods of producing random digits is, of course, in a state of sin.”

Sinful or not, computational randomness happens all the time. Online casinos shuffle virtual card decks millions of times a day. Every millisecond, cryptographic protocols use random numbers to encrypt computer-to-computer communications. How is this done? If computers are incapable of randomness, from what source does a computer generate randomness? One way of producing randomness computationally is to avoid arithmetical methods altogether. If one needs a random number, one must find it outside of a computer system. In some cases, casino software makes a call to a computer hooked up to a physical, truly random system such as atmospheric noise or radioactive decay. (Atmospheric noise is what Random.org, the modern successor to A Million Random Digits, uses.) However, although random physical systems guarantee true randomness, they can be slow and incapable of meeting high demand for random numbers. A second, more scalable though imperfect method is to use a pseudorandom number generator (PRNG). A PRNG is a program that operates on von Neumann’s bad faith; it attempts to make a deterministic mathematical process produce an unexpected result. One of the first PRNGs, suggested by von Neumann himself, was the middle-square method. The middle-square method is almost useless for generating random numbers, but it does serve as an easy introduction to how a (poor) PRNG works. To use the middle-square method, first choose a four-digit number, square that number, take the middle four digits of the eight-digit result, and use those four digits as your random number. If you need another number, repeat the process starting with your new number. It is not hard to see how one might run into problems with this procedure, especially if the middle four numbers all turn out to be zero. Even worse, in only a few generations, it quickly becomes apparent that the middle-square method produces more of some numbers than other numbers. The middle-square method, to use Brecht’s expression, is hopelessly biased.

So much for machines. But what about humans? Can a human produce random numbers? In other words, if a person were asked to come up with a long string of random numbers, if the person did not think and was asked to blurt out these numbers, perhaps operating under the spell of the unconscious, would every digit be as likely to occur as every other? According to Modernist artists, the answer should be “yes”—after all, chance operations, especially those of the Dadaist kind, were meant to free the artist from bias. As Brecht wrote of the Dadaists, if the unconscious is free from “parents, social custom and all the other artificial restrictions on intellectual freedom,” then it should be able to do exactly what a deterministic machine cannot do: generate randomness.

Unfortunately, whatever the unconscious may be, it is not unbiased. It may be, in fact, more deterministic than conscious thought. Brecht, the professional chemist, knew that across scientific fields unconscious and reflexive behavior have been shown to be patterned, if not deterministic, offering no respite from social conditioning. Starting at the beginning of century, Freud’s The Psychopathology of Everyday Life,suggested that the unconscious was a poor random number generator. (“For some time I have been aware that it is impossible to think of a number, or even of a name, of one’s own free will.”) Soon thereafter, Ivan Pavlov showed that the reflexes could be conditioned and deconditioned. By mid-century, as Brecht writes, statisticians proved that human test subjects showed bias even when selecting wheat plants for measurement. For scientists, the unconscious is deeply deterministic, more an automaton than automatic.

Claude Shannon’s Mind-Reading Machine, 1953, “Codes & Clowns” installation view at Heinz Nixdorf MuseumsForum, Paderborn, 2009. Object on loan from MIT Museum, Cambridge, MA. Photo: Jan Braun

Claude Shannon’s Mind-Reading Machine, 1953, “Codes & Clowns” installation view at Heinz Nixdorf MuseumsForum, Paderborn, 2009. Object on loan from MIT Museum, Cambridge, MA. Photo: Jan Braun

Claude Shannon, the father of information science, invented games and machines to illustrate this determinism. Shannon played one such game with his wife, Mary Elizabeth “Betty” Moore, also a mathematician. The game involved Shannon reading aloud to Moore one randomly selected letter from a detective novel. He then asked her to guess the next letter in the sentence. After telling her the right answer, she then guessed the following letter, which he revealed to her, and they worked their way though the book, letter-by-letter. Most probably she got the first letter of a word wrong, but as more of the word was revealed, the chances of guessing the next letter increased. This is because the probability of any character drops significantly as a word is revealed. The letter P could be followed by many letters, but the string “probabl” will most likely be followed by an E. Letters tended to have statistically probable groupings, so if the letter was Q, Moore could almost be certain the next letter would be U. What Shannon was formalizing in his research was something we all know intuitively: English, like all languages, is statistically patterned, and we have internalized these patterns, though we might not be able to consciously apply numbers to the probability.

A second game, Mind-Reading Machine, required a custom-built mechanical device with a button and two lights. A player said the words “left” or “right” and pressed the button. The machine (built without sound inputs) then guessed which direction the player said by switching on either the left or right lights. The player registered whether or not the machine was correct. If the machine matched the player, then the machine won a point. If it was wrong, the human won a point. The machine was programmed with a simple algorithm to guess the player’s next choice, an algorithm inspired by the one hinted at in Edgar Allen Poe’s “Purloined Letter” for playing “odds and evens.” As long as the human did not guess the algorithm, the machine stood a good chance of guessing the player’s supposedly random choices. The Mind-Reading Machine, though primitive, showed that the unconscious was semi-regular; it was not a fount of the unexpected. Its workings could, perhaps, even be mimicked by a very simple machine.

2.5.

Information is surprise, Claude Shannon wrote. Information is what we don’t expect, and information is what we don’t have. If we already have all information, there is no need for sending messages. If we already have the information in a message, the message is redundant. A redundant message may indicate more information—”If sent twice, the message is false”—or it may be an antidote to noise. Either way, if a message is redundant, it is expected. Redundancy can be reduced, compressed. Zero, zero, zero, zero… what comes next? With a random string, on the other hand, it is impossible to know what digit comes next. Randomness, then, is pure information. A random string is paradoxically full of information, more information than any English word. A random string, like information, is surprise. Chance, therefore, is also surprise. Pure chance can’t be reduced. It can’t be compressed. It can’t be anticipated. Chance is, as Jean Arp put it, the “deadly thunderbolt.”

When I read Arp’s phrase, I couldn’t help but think of Lee Sedol, 9-dan Go master who lost a five-match tournament this past March to Google DeepMind’s Go-playing program, AlphaGo. As discussed in part one of this article, during the second match, AlphaGo made a move so unexpected and original—Move 37—that Lee left the tournament room in shock. He went on to lose the match. The strategy that AlphaGo built around Move 37 was not taken out of a database of publicly known moves. Move 37 was new to the 5,500-year history of Go. It belonged to a style of play that Go commentators sometimes called “inhuman” and “alien.” In the months that followed, AlphaGo continued to develop this alien style of play, and, according to Demis Hassabis, CEO of DeepMind, Lee has begun learning from the machine.

Let’s go back to our fiction about machines. It is untrue that machines cannot make other machines. From von Neumann replicators to self-assembling robots to the entire machine tools industry, there exists, both on paper and in reality, machines that can make other machines. It’s also untrue that machines cannot change functions. Computers are the best example of multipurpose machines. And as far as microbiology is concerned, there is no need to believe that life operates on any different physical principles than non-life. There remains, though, the question of determinism. Can a machine operate along non-deterministic lines? Asked another way, can a machine be more than the sum of its parts? Can it be creative? The answer is not so much to be found in randomness, in stochastic algorithms, but in learning. Learning—the ability to incorporate experience into decision-making—is what helps separate deterministic and stochastic systems. Life may operate under the same physical laws as non-life, but with a crucial difference: it learns. One does not need consciousness, either—all an organism needs for learning is genetics. At its simplest, genetics represents a record of past successes. On a more complex level, immune systems remember infections and brains record memories. When an AI learns to play Go, when it creates new styles of play as AlphaGo did, it operates on principles abstracted from neuronal reinforcement. AlphaGo learned how to play Go, it invented new strategies of play, and now it is teaching those styles to Lee Sedol. When a system can learn, that system is no longer deterministic. It is adaptive, it is complex, and it is creative.

Originally published in Mousse Magazine 55 + 53, 2016

–

^ To produce Computer Composition with Lines, Noll used a “microfilm plotter,” a cathode ray tube synchronized with a camera. The subjects were presented with photocopy reproductions of the plotter drawing and painting.